VeriWave’s WaveDeploy: An Introduction and Review

By CWNP On 09/23/2010 - 25 Comments

If you’ve been keeping up with recent industry news, vendor tests, and analyst articles, you may have noticed the seemingly ubiquitous presence of VeriWave. If you don’t know them already, VeriWave specializes in WLAN product testing and network validation tools/suites, and after looking through their product line, it won’t surprise you that many vendors depend on them for WLAN product assurance and competitive testing. Take a look around at some of their recent mentions.

Validating Cisco DFS performance: http://www.youtube.com/watch?v=nJwMW_z9gwU

Aruba ARM review: http://tinyurl.com/28wvneg

Network World iPhone4 test: http://www.networkworld.com/news/2010/090710-iphone4-wifi-university.html?page=2

There’s plenty more; just search for VeriWave in your favorite search engine.

Whether you are a manufacturer of WLAN silicon, clients, or APs, a service provider, an integrator/VAR, or an enterprise or SMB end-user, VeriWave has a product for you (boy, that sounds like a dreadful marketing cliché). Many of VeriWave’s products are designed for engineers, so their traditional customers have been manufacturers. In this market, they have tools and test suites for myriad functions. For example, they offer MIMO signal generators for testing 802.11 radio and baseband functions; RF interference and DFS pulse generators for testing spectrum analysis and radar functionality; video, voice, and QoE test suites for measuring the performance of sensitive applications; roaming platforms for validating your WLAN system’s ability to handle many mobile clients; interoperability products, benchmarking products, RF isolation chambers, and on and on. They also have the WaveLab, a facility-for-hire dedicated to real-world stress testing. The common denominator in all of this is that they can do just about everything when it comes to testing, assessing, and validating WLAN behavior. Of course, due to the cost, complexity, and time investment required to use these products properly, many of them appeal primarily to vendors and large enterprise customers with engineering staff.

VeriWave recently (May of this year) expanded their appeal to the common end-user with an application performance validation and assessment tool called WaveDeploy. WaveDeploy really hits the mark with a procedural philosophy that I can confidently promote. First off, the test approach is not built around collecting and presenting throughput maximums and other ours-is-better-than-yours data that belongs in a vendor’s marketing document. Taking the opposite approach, WaveDeploy concentrates on identifying how applications actually perform in the network rather than on reporting the maximum measured numbers. Sounds too simple, right? The procedure is as follows:

1. Connect the clients (Mobile WaveAgents) being tested to the WaveDeploy server (Fixed WaveAgent). By default, they’ll inherit a “host type,” such as laptop, PDA, VoIP phone, etc. You can change or keep the default host type.

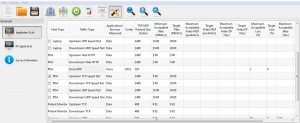

2. Then configure the SLA and RF requirements, such as voice MOS, TCP downstream throughput, SNR, and packet loss, for your devices and applications (or use the default settings that reflect typical usage and acceptable performance metrics). The graphic shows the application SLAs for a variety of hosts and traffic types.

3. Finally, conduct the test(s), which include both an aggregate stress test in which all WaveAgents compete for the medium at the same time, and a per-application test in which each application is tested independently without other competing traffic (at least, none from other WaveAgents). The distinction between loaded and unloaded tests is helpful for practical analysis of network performance. After each test, WaveDeploy gathers the data for each location being validated.
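To make the loaded-versus-unloaded comparison concrete, here is a minimal Python sketch of how you might quantify the difference yourself once you have both numbers for a location. This is not WaveDeploy code: the function name and the sample figures are hypothetical, and the calculation is simply the fractional drop in throughput between the two tests.

```python
def load_degradation(unloaded_mbps: float, loaded_mbps: float) -> float:
    """Return the fractional throughput drop from the unloaded to the loaded test.

    Hypothetical helper for illustration only; not part of WaveDeploy.
    0.0 means no drop, 0.25 means the loaded result is 25% lower.
    """
    if unloaded_mbps <= 0:
        raise ValueError("unloaded throughput must be positive")
    return (unloaded_mbps - loaded_mbps) / unloaded_mbps


if __name__ == "__main__":
    # Made-up sample values, not measurements from the review.
    drop = load_degradation(unloaded_mbps=10.0, loaded_mbps=7.5)
    print(f"Throughput drop under load: {drop:.0%}")
```

A large drop at one location suggests that contention, rather than coverage, is the limiting factor there.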

The test results are displayed on a floor plan, where each network (SSID), client, application type (data, voice, video), RF metric, and specific application receives a color-coded indicator of performance. Green is good, red is bad. The performance rating is based entirely on the SLAs previously defined, not on some arbitrary assessment of high and low or good and bad. In other words, WaveDeploy is designed from the ground up to identify application requirements and to measure whether a network meets those requirements. It’s a survey process that seeks to answer the question “will my network work for my applications?” by transferring actual application data. Below is a simple sample that I took with my iPhone. You can see that the HTTP download throughput hovered around the 2-4 Mbps mark in different measurement locations. This is below my target performance (5 Mbps), but just above the minimum acceptable (2 Mbps).
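The idea of rating a measurement against a target and a minimum can be sketched in a few lines of Python. This is only an illustration of the concept under my own assumptions, not WaveDeploy’s actual logic: the class and function names are hypothetical, and the "yellow" label for results between the minimum and the target is an assumed middle band (the only colors mentioned above are green and red).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThroughputSla:
    """Hypothetical SLA with a target and a minimum acceptable throughput."""
    target_mbps: float
    minimum_mbps: float


def rate(measured_mbps: float, sla: ThroughputSla) -> str:
    """Rate a measurement against the SLA.

    "green"  : meets or exceeds the target
    "yellow" : below target but at or above the minimum (assumed middle band)
    "red"    : below the minimum acceptable value
    """
    if measured_mbps >= sla.target_mbps:
        return "green"
    if measured_mbps >= sla.minimum_mbps:
        return "yellow"
    return "red"


if __name__ == "__main__":
    # SLA values taken from the iPhone example: 5 Mbps target, 2 Mbps minimum.
    http_sla = ThroughputSla(target_mbps=5.0, minimum_mbps=2.0)
    for mbps in (1.5, 3.0, 6.0):
        print(f"{mbps} Mbps -> {rate(mbps, http_sla)}")
```

With the 5 Mbps target and 2 Mbps minimum from my test, a 3 Mbps reading lands in the middle band: acceptable, but short of the target.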

In addition to reviewing the data visually on the floor plan, you can export it for presentation in other formats (e.g. CSV) or view it in WaveDeploy’s analysis table. These options make the tool flexible for different types of site assessments, including those in which the findings are reported to a project manager or IT management.

All in all, I’m a fan. The interface is pretty simple and intuitive. To be fair, I haven’t worked through all the ins and outs of WaveDeploy, nor have I used it with a large number of clients in an enterprise setting. I spent enough time with it to see what it does, how it is organized, and whether there were any glaring flaws or shining advantages. As I’ve emphasized in this post, the facet that stood out to me is VeriWave’s focus on application performance with objective and reasonable criteria. After all, assessing wireless network performance is VeriWave’s meat and potatoes, so it’s fair to expect their fundamentals to be sound.

The only nitpick I can point out is that current documentation is a little skimpy in areas that explain what is happening when you run the test. That’s where I got hung up. Since I didn’t know exactly what was happening behind the scenes [until it was explained to me], I didn’t know how to interpret the data. The confusion was primarily related to the way WaveDeploy performs tests and displays results for the aggregate and the individual application tests. That’s important, but it’s an easy fix, and I’m assured that it is already being resolved. They responded quickly to that request. Good stuff.

For more info about WaveDeploy, check out the annexed product-specific site at http://wavedeploy.com/ or see VeriWave’s main site at http://www.veriwave.com/.

Tagged with: VeriWave, WaveDeploy, testing, validation, assessment, survey, WLAN

Blog Disclaimer: The opinions expressed within these blog posts are solely the author’s and do not reflect the opinions and beliefs of Certitrek, CWNP, or their affiliates.
